Heart failure (HF) is one of the leading causes of hospitalization and mortality worldwide. In developed countries, most institutions have established HF programs to improve quality of care and outcomes. However, there are many possible interventions and it is not clear which are the most effective in improving outcomes. HF services are usually modeled according to local policy requirements and budget limitations, which may increase the uncertainty about their effectiveness even more. This article highlights the importance of conducting periodic quality assessments on performance and outcome measures as the most appropriate way to ensure that HF programs are achieving their intended goals. For this purpose, we propose an evaluation strategy based on the Avedis Donabedian model (for the quality evaluation of health care interventions) and controlled quasi-experimental study designs.

DETERMINANTS OF THE EFFECTIVENESS OF HF PROGRAMS

As the world's older population continues to grow, chronic heart diseases are becoming the most common diseases seen by cardiologists in their daily practice. Patients with chronic conditions have frequent hospital admissions and account for a large proportion of health care costs.1 To improve chronic patient care and control costs, the need for a change in the health care system is increasingly recognized, moving from the classic model, based on acute admission, toward an integrated system that can ensure continuity of care from the inpatient to outpatient settings, including community care, rehabilitation, and social care.2

Important steps have been taken toward the integration and multidisciplinary care of HF in many developed countries. For more than a decade, clinical guidelines have recommended the development of dedicated teams to improve outcomes in chronic HF patients.3 The main objectives of these programs are to ensure a seamless transition of care from inpatient to the community, provide evidence-based treatment in a timely manner, facilitate access to specialized care, and enhance patient knowledge in self-care.

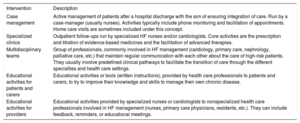

Systematic reviews evaluating the effectiveness of integrated programs for chronic patients, including HF services, show, by and large, positive results in terms of cost-effectiveness and patient outcomes.4 HF services may reduce HF readmissions, mortality, and costs, and improve patient adherence and satisfaction.5 Nevertheless, HF services may differ substantially from one another in the interventions they provide and, thus, in their effectiveness (table 1). A systematic review published by the Cochrane Collaboration in 2012 found limited evidence to support HF units that are mainly based on physician-led HF clinic follow-ups. In contrast, those based on case management and multidisciplinary teams were successful in reducing readmissions and mortality.6 Other studies, however, have reported different results. The COACH study,7 one of the landmark trials that compared a nurse-led HF program with usual care (follow-up by a cardiologist), did not show any benefit in mortality or HF readmissions. A network meta-analysis published in 2017 comparing transitional care services for HF patients8 concluded that nurse-led home visits were the most effective intervention to reduce mortality and readmissions, with the greatest pooled cost-savings, followed by case management and specialized clinics. Other interventions such as telemonitoring, education in self-management, and pharmacist-led interventions had no impact on clinical outcomes. A systematic review of randomized controlled trials (RCTs) evaluating integrated tools for chronic patients in Spain9 showed that the most effective interventions are home visits, education in self-care, and case management.

Table 1. Main clinical service interventions developed to improve outcomes in patients with chronic HF

| Intervention | Description |
|---|---|
| Case management | Active management of patients after a hospital discharge with the aim of ensuring integration of care. Run by a case-manager (usually nurses). Activities typically include phone monitoring and facilitation of appointments. Home care visits are sometimes included under this concept. |
| Specialized clinics | Outpatient follow-ups run by specialized HF nurses and/or cardiologists. Core activities are the prescription and titration of evidence-based medicines and the facilitation of advanced therapies. |
| Multidisciplinary teams | Group of professionals, commonly involved in HF management (cardiology, primary care, nephrology, palliative care, etc.) that maintain regular communication with each other about the care of high-risk patients. They usually involve predefined clinical pathways to facilitate the transition of care through the different specialties and health care settings. |
| Educational activities for patients and carers | Educational activities or tools (written instructions), provided by health care professionals to patients and carers, to try to improve their knowledge and skills to manage their own chronic disease. |
| Educational activities for providers | Educational activities provided by specialized nurses or cardiologists to nonspecialized health care professionals involved in HF management (nurses, primary care physicians, residents, etc.). They can include feedback, reminders, or educational meetings. |

HF, heart failure.

One of the causes behind the controversy on the effectiveness of HF services is the lack of appropriate description of program components.4 For example, “nurse-led interventions” can vary from administrative tasks (case management, telemonitoring surveillance, telephone contact) to clinical (home visits, nurse-led clinics) or educational interventions (education imparted to patients, carers or providers involved in HF management). The absence of a detailed definition of the components of successful HF services may lead to erroneous conclusions about program effectiveness and limit the application of research results in real-world clinical practice.

Another limitation of the clinical evidence on HF programs stems from the weak study designs commonly used to evaluate their effectiveness. Nonrandomized study designs with a before-after approach are typically used to demonstrate a reduction in adverse outcomes after program implementation. However, they are known to have poor internal validity as they are largely affected by biases and confounding factors.10 RCTs have the strongest ability to determine causality, in other words, to correctly attribute outcomes to the assessed intervention. Nevertheless, nonblinded designs carry selection biases and are exposed to contamination, which can also limit their conclusions.

Better reporting of interventions and the introduction of more appropriate study designs should enhance understanding of what drives the beneficial effects of HF services. Nevertheless, it is unlikely that any single specific HF model will be suitable for every setting. It is also likely that the same interventions carried out in different geographic areas will have effects of different magnitude on clinical outcomes. For example, telemonitoring services may be a crucial component in rural areas with limited access to health care, but may have no incremental impact on outcomes in urban areas. In addition, the number and the complexity of interventions that an HF program can provide will always be influenced by local policy, institutional particularities, and budget constraints. Therefore, providers will always need to adapt programs to respond to institutional requirements and contextual factors.

As HF services and their contextual factors are unique to each region, strategies and their effectiveness should be assessed individually. HF services are intended to improve quality of care, and therefore they should be subject to periodic local evaluations, with simple but robust study designs, to ensure that the model is truly accomplishing the expected goals. These periodic assessments should help identify the most beneficial (or useless) components in that particular area in order to maximize their potential benefit.

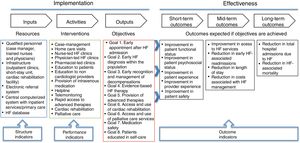

HOW TO EVALUATE THE EFFECTIVENESS OF A LOCAL HF SERVICE

Identifying program components and objectives

In 1966, Avedis Donabedian laid down the basis for the quality assessment of health care interventions.11 His model, which remains the dominant framework for assessing quality in health care, divides programs into 3 parts: structure, process, and outcomes. Structure consists of the resources granted for program development: logistics, number of staff (specialized nurses and physicians), number of clinics, the availability of a help-line or short-stay unit, etc. Process is the result of the interaction between health care professionals and patients. In other words, it is the sum of activities performed in a health care setting (eg, diagnosis, treatment, education). Finally, outcomes are the effects of intervention on patient health and resource utilization. These 3 components are connected in such a way that outcomes will happen if activities are optimally performed, which happens when appropriate resources are available.

The evaluation of an HF unit requires the identification of the 3 Donabedian components. It is advisable to illustrate them graphically to facilitate communication and agreement among all involved health care professionals and stakeholders. Based on the Donabedian framework, the Centers for Disease Control and Prevention Division for Heart Disease and Stroke Prevention published a guideline to help clinicians design evaluation strategies for health care interventions through the development of a logic model.12 In figure 1 we illustrate how this model can be used for the evaluation of HF services.

Figure 1. Example of a logic model designed for the quality assessment of a heart failure (HF) service. A basic logic model has 2 sections: the program implementation and the effectiveness evaluation. The first part has to describe the structure or resources, the activities that need to take place, and the outputs or goals that are supposed to be obtained. The second part describes the outcomes in the short-, mid-, and long-term that the program is intended to achieve.

HF programs, similar to other interventions aiming to improve quality of care, are based on assumptions. We believe that certain resources allow the performance of activities that will positively impact population health. The probability that these assumptions or “beliefs” become true depends on the strength of the scientific evidence behind them. For instance, a service formed by a specialized HF team coordinated with primary care and with provision of home care visits and case management is likely to improve patient outcomes as the evidence that links these interventions to positive outcomes is strong. In contrast, HF services based on telemonitoring and HF clinic follow-ups are less likely to be effective in the context of a well-developed primary care system, but may be effective in the absence of such a system. The model needs, therefore, to be explored and discussed relative to the context and resources as inaccurate or overlooked assumptions may lead to costly interventions with no impact on patient health.

Once the intervention components are identified, the next step is to select indicators to measure them. An indicator is a measurable element that has been demonstrated to correlate with quality of care. A number of indicators have been proposed by scientific societies to measure the quality of HF services.13 Indicators that measure structure include the number of HF clinics, the number of staff, and the presence or absence of qualified specialist nurses. Some of the most common process or performance indicators are the percentage of patients whose left ventricular ejection fraction was assessed before discharge, the percentage of patients on angiotensin-converting enzyme inhibitor and beta-blocker medications, and the percentage of discharged patients who receive an early follow-up appointment.
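As an illustration of how the process indicators mentioned above could be computed from routine discharge data, the following is a minimal sketch. The `Discharge` record, its field names, and the `process_indicators` function are hypothetical and introduced only for this example. The sketch also makes a simplifying assumption: every discharge counts in the denominator, whereas real indicator definitions usually restrict it to eligible patients (for example, those without contraindications to a given drug).

```python
from dataclasses import dataclass


@dataclass
class Discharge:
    """One HF discharge record (hypothetical fields, for illustration only)."""
    lvef_assessed: bool      # LVEF was assessed before discharge
    on_acei: bool            # discharged on an ACE inhibitor
    on_beta_blocker: bool    # discharged on a beta-blocker
    early_follow_up: bool    # an early follow-up appointment was given


def process_indicators(records):
    """Return each process indicator as a percentage of all discharges.

    Assumption: all discharges are eligible for every indicator.
    """
    n = len(records)
    if n == 0:
        raise ValueError("no discharges to evaluate")

    def pct(meets_criterion):
        return 100.0 * sum(1 for r in records if meets_criterion(r)) / n

    return {
        "lvef_assessed_pct": pct(lambda r: r.lvef_assessed),
        "acei_and_beta_blocker_pct": pct(lambda r: r.on_acei and r.on_beta_blocker),
        "early_follow_up_pct": pct(lambda r: r.early_follow_up),
    }
```

Tracking these percentages at regular intervals (for instance, monthly) would also produce the kind of repeated measurements that time series designs require.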

Finally, to evaluate program effectiveness, outcomes in the short-, mid-, and long-term need to be selected and evaluated. Measuring program effectiveness is an essential part of the evaluation strategy as outcomes are the ultimate dimension of quality. The most common outcome indicators are the 30-day or 3-month readmission rate and the HF-related mortality rate. Outcomes that are “relevant” to patients (also known as Patient-Reported Outcome Measures [PROMs]) are increasingly recognized by international organizations as a fundamental part of interventions aiming to improve health in patients with chronic disease.13 In HF, PROMs include measurements of quality of life, psychosocial status, functional capacity, and burden of care. More ambitious and comprehensive evaluations can also address other quality dimensions such as patient safety, improvement in access, cost-effectiveness, humanity (eg, patient experience), and equity.
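The readmission rate named above can be sketched as a simple calculation over index discharges. The function name `readmission_rate` and the input layout (pairs of discharge date and next HF admission date) are assumptions made for this example. The sketch deliberately ignores deaths, transfers, and incomplete follow-up, all of which a real evaluation would need to handle.

```python
from datetime import date


def readmission_rate(index_discharges, window_days=30):
    """Percentage of index discharges followed by an HF readmission
    within `window_days` days.

    `index_discharges` is a list of (discharge_date, next_admission_date)
    pairs; next_admission_date is None when no readmission was recorded.
    Hypothetical layout, for illustration only.
    """
    if not index_discharges:
        raise ValueError("no index discharges")
    count = 0
    for discharged_on, readmitted_on in index_discharges:
        if readmitted_on is None:
            continue
        days = (readmitted_on - discharged_on).days
        if 0 <= days <= window_days:
            count += 1
    return 100.0 * count / len(index_discharges)


# Usage with made-up dates: one readmission at 10 days, one at 45 days.
example = [
    (date(2023, 1, 1), date(2023, 1, 11)),
    (date(2023, 2, 1), date(2023, 3, 18)),
    (date(2023, 3, 5), None),
    (date(2023, 4, 9), None),
]
print(readmission_rate(example))                   # 30-day rate
print(readmission_rate(example, window_days=90))   # roughly 3-month rate
```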

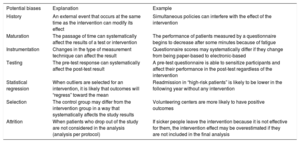

Assessing HF outcomes through quasi-experimental study designs

RCTs are the most powerful study designs to assess causality.14 Nevertheless, when addressing the evaluation of health care interventions, RCTs may not be a suitable design for several reasons. Firstly, randomization of a quality intervention might be considered unethical if part of the population is not exposed to it for years until the study finishes. Although this inconvenience can be mitigated with stepped-wedge designs (where all individuals or study sites receive the intervention although sequentially or in “steps”), such trials are expensive and complex to conduct. Secondly, the establishment of an HF program is usually promoted by public institutions, which usually lack funds to carry out RCTs to assess effectiveness. Public resources, when available, are usually assigned to project implementation but the evaluation phase that should follow is often neglected. Last, RCTs are no longer an option for the evaluation of programs already implemented. In this scenario, nonrandomized studies are commonly used to analyze effectiveness. However, clinicians need to be aware of their limitations and potential biases to avoid inappropriate conclusions (table 2).

Table 2. Threats to internal validity that may affect health care evaluations

| Potential biases | Explanation | Example |
|---|---|---|
| History | An external event that occurs at the same time as the intervention can modify its effect | Simultaneous policies can interfere with the effect of the intervention |
| Maturation | The passage of time can systematically affect the results of a test or intervention | The performance of patients measured by a questionnaire begins to decrease after some minutes because of fatigue |
| Instrumentation | Changes in the type of measurement technique can affect the result | Questionnaire scores may systematically differ if they change from being paper-based to electronic-based |
| Testing | The pre-test response can systematically affect the post-test result | A pre-test questionnaire is able to sensitize participants and affect their performance in the post-test regardless of the intervention |
| Statistical regression | When outliers are selected for an intervention, it is likely that outcomes will “regress” toward the mean | Readmission in “high-risk patients” is likely to be lower in the following year without any intervention |
| Selection | The control group may differ from the intervention group in a way that systematically affects the study results | Volunteering centers are more likely to have positive outcomes |
| Attrition | When patients who drop out of the study are not considered in the analysis (analysis per protocol) | If sicker people leave the intervention because it is not effective for them, the intervention effect may be overestimated if they are not included in the final analysis |

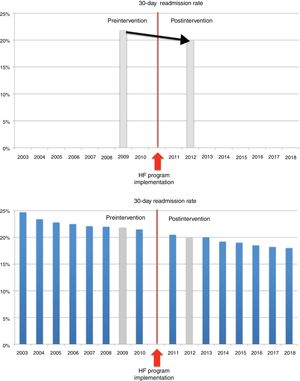

One traditional method commonly used to analyze the effectiveness of already established HF services is a pre-post or before-after study. In this model, outcome measures (for instance, mortality or 30-day HF readmission) are measured before and after the intervention is introduced. If there is a significant difference between the pre- and post-intervention time points, it is assumed that the change is due to the intervention. Pre-post studies are simple to design and allow conclusions to be drawn easily. Nevertheless, they are intrinsically weak at establishing causality as they cannot address possible bias or confounding factors.15 Clinicians need to be cautious when interpreting pre-post study results as changes in outcomes may simply reflect secular trends or co-occurring events. One common bias that affects outcome research in HF programs is statistical regression. HF patients, typically selected because they are at high risk of adverse events, are likely to move toward the mean without any intervention.16
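Statistical regression can be made concrete with a small simulation. The sketch below is purely illustrative: the function name, the patient count, the range of monthly admission probabilities, and the "high-risk" threshold are all arbitrary assumptions and do not come from any study. Each simulated patient has a constant underlying admission risk and receives no intervention. Patients are labeled high-risk when they have 3 or more admissions in year 1, and their admissions are then counted again in year 2.

```python
import random


def simulate_regression_to_mean(n_patients=5000, threshold=3, seed=0):
    """Simulate two years of admissions with NO intervention.

    Returns (mean admissions in year 1, mean admissions in year 2,
    number of patients) for patients selected as high-risk in year 1.
    All parameters are arbitrary illustrative assumptions.
    """
    rng = random.Random(seed)
    year1, year2 = [], []
    for _ in range(n_patients):
        # Patient-specific monthly admission probability, constant over time.
        p = rng.uniform(0.02, 0.30)
        year1.append(sum(rng.random() < p for _ in range(12)))
        year2.append(sum(rng.random() < p for _ in range(12)))

    # "High-risk" patients are selected on their observed year-1 admissions.
    selected = [i for i in range(n_patients) if year1[i] >= threshold]
    mean_y1 = sum(year1[i] for i in selected) / len(selected)
    mean_y2 = sum(year2[i] for i in selected) / len(selected)
    return mean_y1, mean_y2, len(selected)


mean_y1, mean_y2, n_selected = simulate_regression_to_mean()
print(f"high-risk patients: {n_selected}")
print(f"mean admissions, year 1: {mean_y1:.2f}")
print(f"mean admissions, year 2: {mean_y2:.2f}")
```

Because selection is based on an extreme year-1 value that is partly due to chance, the year-2 mean in the selected group is lower even though nothing was done. A pre-post study of a program enrolling these patients would attribute that drop to the program.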

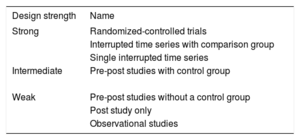

Due to the intrinsic weaknesses of pre-post studies, other quasi-experimental designs are emerging as alternatives for outcomes research when RCTs are not an option (table 3).

Table 3. Hierarchy of study designs according to their ability to establish causality

| Design strength | Name |
|---|---|
| Strong | Randomized controlled trials |
| Strong | Interrupted time series with comparison group |
| Strong | Single interrupted time series |
| Intermediate | Pre-post studies with control group |
| Weak | Pre-post studies without a control group |
| Weak | Post study only |
| Weak | Observational studies |

Interrupted time series designs aim to detect whether an intervention has been able to significantly modify the underlying trend of an outcome. They need a minimum of 8 time points before and after the intervention is implemented to be able to attribute changes in the trend to the intervention. In this way, interrupted time series designs can control most of the biases that affect pre-post studies, including statistical regression (figure 2). Quasi-experimental designs can be strengthened if the studied population is compared with another group in which the outcomes are expected to have a similar trend without the intervention (for example, patients discharged with HF as the main diagnosis from another institution where a specific HF service is not available). Another advantage is that routine health administrative data (usually collected for billing purposes or diagnosis-related group classifications) are well suited for these designs as they are typically recorded at regular intervals, which can reduce the costs associated with the program evaluation. In addition, as the analyses are simple and the effects are graphically represented, they are illustrative and intuitive, and useful to communicate with different stakeholders.
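One common way to analyze an interrupted time series is segmented regression, which estimates the baseline trend together with any change in level and in trend at the time of the intervention. The article does not prescribe a specific analysis method, so the following is only a minimal sketch under stated assumptions: equally spaced time points, ordinary least squares, and no adjustment for autocorrelation or seasonality, both of which a real analysis should consider. The function names and the example data are hypothetical. The 8-point check reflects the minimum stated above.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("design matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_its(rates, n_pre):
    """Fit rate = b0 + b1*t + b2*post + b3*post*(t - n_pre) by least squares.

    `rates` holds one outcome value per equally spaced period; the first
    `n_pre` values precede the intervention.
    """
    if n_pre < 8 or len(rates) - n_pre < 8:
        raise ValueError("need at least 8 time points before and after")
    rows = []
    for t in range(len(rates)):
        post = 1.0 if t >= n_pre else 0.0
        rows.append([1.0, float(t), post, post * (t - n_pre)])
    k = 4
    # Normal equations: (X'X) beta = X'y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, rates)) for i in range(k)]
    b0, b1, b2, b3 = solve_linear_system(xtx, xty)
    return {
        "baseline_level": b0,
        "baseline_trend": b1,
        "level_change": b2,
        "trend_change": b3,
    }


# Made-up series: the readmission rate falls by 0.5 points per month
# throughout, with no real effect at the intervention (month 8).
rates = [25.0 - 0.5 * month for month in range(16)]
naive_pre_post = sum(rates[8:]) / 8 - sum(rates[:8]) / 8
fit = fit_its(rates, n_pre=8)
print("pre-post difference in means:", naive_pre_post)
print({name: round(value, 3) for name, value in fit.items()})
```

In this made-up series the simple pre-post comparison shows a drop of 4 points in the mean readmission rate, whereas the segmented regression assigns the whole decline to the pre-existing trend and estimates level and trend changes of essentially zero.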

Figure 2. Outcome research on heart failure (HF) readmission rates before and after implementation of an HF service, using pre-post (above) and interrupted time series (ITS) study designs (below). The upper graph shows the result of a pre-post study on early HF readmission before and after implementation of an HF program. The intervention can be considered effective if the reduction is attributed to it. The lower graph shows the conclusions drawn for the same intervention using an ITS design. The inclusion of additional data points reveals that the readmission rate was already decreasing, so it is likely that the intervention had no impact on this outcome.

HF programs are a good example of new initiatives that aim to improve health care delivery for patients with chronic conditions. There is substantial heterogeneity in both the interventions that can be offered and the clinical evidence that they improve outcomes. Moreover, geographical characteristics and local policies influence the way these services are modeled across regions. To ensure that newly implemented HF services are indeed improving outcomes, health care professionals and stakeholders should be encouraged to assess their effectiveness through local quality evaluations. For that purpose, logic models and controlled quasi-experimental study designs are useful tools to obtain inexpensive but high-quality evidence about the effectiveness of HF services.

CONFLICTS OF INTERESTS

None declared.